We tend to underestimate combinatorial explosion.

For example, there is a somewhat famous anecdote which states that if you shuffle a standard deck of cards, the sequence of cards you hold in your hand has almost certainly never occurred before in the history of mankind. Indeed, there are 52! ≈ 8 × 10^67 possible sequences, so any good enough shuffle will, in practice, yield an ordering that nobody has ever held before.
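To get a feel for the orders of magnitude, here is a rough back-of-the-envelope sketch. The population figure and the shuffling rate are deliberately generous, made-up assumptions (they are not from any measurement), and the collision estimate assumes every shuffle is uniformly random.

```python
import math

# Number of distinct orderings of a 52-card deck.
orderings = math.factorial(52)
print(f"52! = {orderings:.3e}")  # about 8.066e+67

# Hypothetical, deliberately generous assumptions:
people = 10**11                        # rough order of magnitude of humans who ever lived
shuffles_each = 100 * 365 * 24 * 3600  # one shuffle per second for 100 years
total_shuffles = people * shuffles_each

# Union-bound estimate of the chance that any two shuffles ever coincide,
# assuming uniformly random shuffles: at most n^2 / (2 * N).
collision_bound = total_shuffles**2 / (2 * orderings)

print(f"total shuffles  = {total_shuffles:.3e}")
print(f"collision bound = {collision_bound:.3e}")
```

Even under these exaggerated assumptions, the bound on a repeated ordering stays vanishingly small, which is the whole point of the anecdote.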

Another example is the famous XKCD comic about password strength, which noted that a sequence of four random words drawn from a dictionary of a few thousand common words yields a stronger password than a pseudo-complex one built by mixing digits, letters, and special characters into a single known word.
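The arithmetic behind that claim can be sketched as follows. The helper `entropy_bits` is a hypothetical name introduced here, and the calculation assumes each word is picked independently and uniformly at random. The 2,048-word list corresponds to the comic's figure of 11 bits per word; the 10,000-word list is included for comparison, and the 28-bit figure for the mangled single word is the comic's own estimate, not something computed here.

```python
import math

def entropy_bits(pool_size: int, picks: int) -> float:
    """Bits of entropy for `picks` independent, uniform choices among `pool_size` options."""
    return picks * math.log2(pool_size)

four_words_2048 = entropy_bits(2048, 4)    # 11 bits per word
four_words_10k = entropy_bits(10_000, 4)   # a larger dictionary, for comparison
mangled_word_bits = 28                     # the comic's estimate, taken as given

print(f"4 words from 2,048:  {four_words_2048:.1f} bits")
print(f"4 words from 10,000: {four_words_10k:.1f} bits")

# Each extra bit doubles the number of guesses an attacker needs.
extra_bits = four_words_2048 - mangled_word_bits
print(f"about {2 ** extra_bits:,.0f} times more guesses than the mangled word")
```

The strength comes entirely from the number of possible combinations, not from how exotic each individual character looks.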

More recently, the arrival of Large Language Models (LLMs) in the spotlight has highlighted the combinatorial nature of text. See, all that LLMs do is predict the next word that should come after a given sequence of words, according to what is usually seen in recorded language.

If that could be done purely statistically, that's how the LLM would do it. For example, if you want to complete the text "the whole nine", you can build a table of the words that have historically followed "the whole nine" in recorded language, and take the word with the most hits as a safe next word. In this case, that would be "yards", because of the English expression "the whole nine yards", whose usage surely overwhelms any other text starting with "the whole nine".
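As a minimal sketch of that lookup-table idea, the snippet below counts which word follows each three-word prefix in a tiny, invented corpus. The corpus and the helper names `build_table` and `predict` are made up for illustration; this is plain frequency counting, not how an actual LLM works.

```python
from collections import Counter, defaultdict

def build_table(corpus, n):
    """Map every n-word prefix to a Counter of the words that follow it."""
    table = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for i in range(len(words) - n):
            prefix = tuple(words[i:i + n])
            table[prefix][words[i + n]] += 1
    return table

def predict(table, text):
    """Return the most frequent follower of `text`, or None if it was never seen."""
    counts = table.get(tuple(text.lower().split()))
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Toy corpus, invented for this example.
corpus = [
    "he went the whole nine yards",
    "she gave it the whole nine yards",
    "the whole nine months felt long",
]
table = build_table(corpus, n=3)

print(predict(table, "the whole nine"))  # yards
print(predict(table, "the whole ten"))   # None: this prefix was never recorded
```

The second call hints at the limit of the approach: as soon as the prefix has never been seen, the table has nothing to say.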

The problem is that this method of looking up statistics only works with very short sequences of words or very idiomatic expressions. With anything a bit more complex, there are no statistics at all, for the simple reason that the sequence of words has never been written or said before. This is where the "model" in LLM comes in: it is trained on a large collection of inputs and associated outputs (sequences of words and, for each of them, the words that most often come next), and is then able to make a prediction about an input it has never seen before. That is the very nature of neural networks.

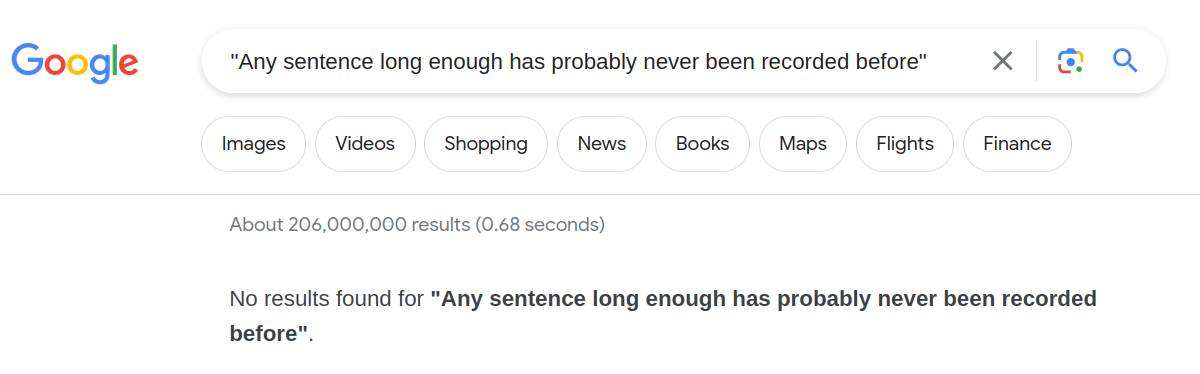

In the case of LLMs, the maximum size of the input is called the "context window", which, for GPT-4 at launch, was 8,192 tokens, or roughly 6,000 words. It seems obvious that a sequence of several thousand words is unique in the history of recorded language, and therefore that a model is required to predict what comes next. But what I find mind-blowing is how few words you actually need before leaving recorded language behind. Any sentence that is long enough has probably never been recorded before.
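To illustrate how quickly a sentence leaves recorded language behind, here is a crude sketch. Every number in it is a made-up assumption chosen only to show the shape of the argument: `recorded_words` is a hypothetical, generous guess at how many words have ever been recorded, and the "branching factor" is a hypothetical count of plausible next words at each position. The function `min_length_exceeding` is likewise introduced here for illustration.

```python
def min_length_exceeding(branching: int, recorded: int) -> int:
    """Smallest n such that branching**n is strictly greater than `recorded`."""
    n, total = 0, 1
    while total <= recorded:
        total *= branching
        n += 1
    return n

# Hypothetical order-of-magnitude guess, not a measurement.
recorded_words = 10**14

# Any of 10,000 dictionary words may follow (ignores grammar entirely).
print(min_length_exceeding(10_000, recorded_words))  # 4

# Only about 100 plausible continuations at each step (a more cautious guess).
print(min_length_exceeding(100, recorded_words))     # 8
```

Under these assumptions, the number of possible sequences overtakes everything ever recorded after fewer than ten words, so most longer sentences cannot all have been written before.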

Here we are, living our lives, casually chatting and writing, shuffling the deck of language every time we deliver a sentence, not realizing that it has probably never been recorded before and, if long enough, is actually brand new in the history of language.