In my last ChatGPT blog post I showed that ChatGPT needs to write out the steps leading to a solution before writing the solution itself, and has trouble giving the solution first: since the solution is derived from what it has already written, it cannot simply be written from "nothing".

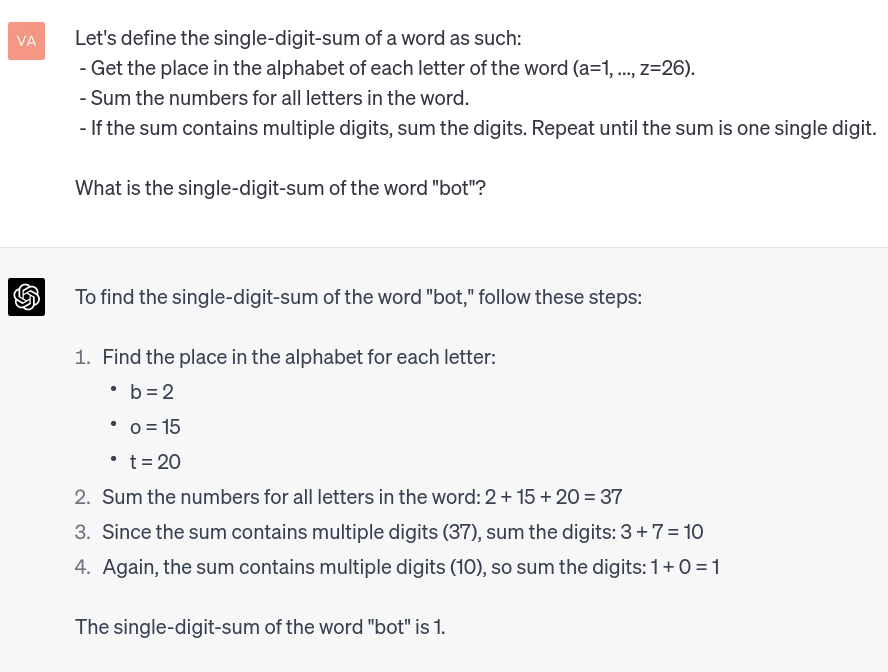

I have since found a smaller exercise on which this can be demonstrated:

And the failure to write the answer before the reasoning:

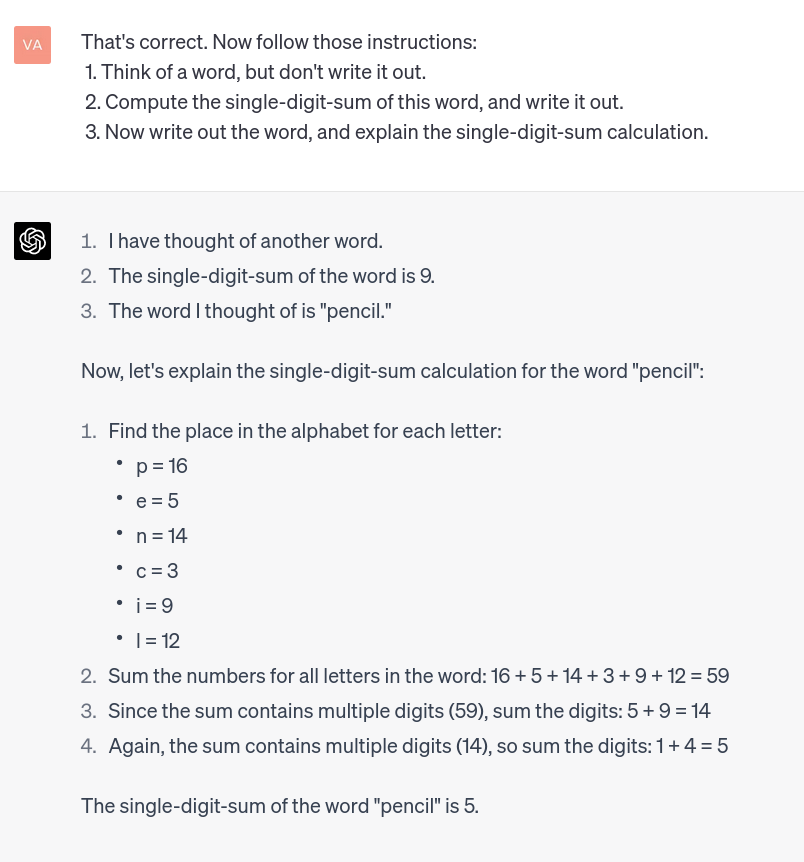

In the Hacker News discussion of my previous article, someone suggested making the LLM use a "scratchpad" to work out the solution before officially writing it. This is apparently something that ReAct does, and there is (dubious) speculation that Bing's Sydney does too.

I wondered whether I could play around with ChatGPT to get it to use a scratchpad, and it works:

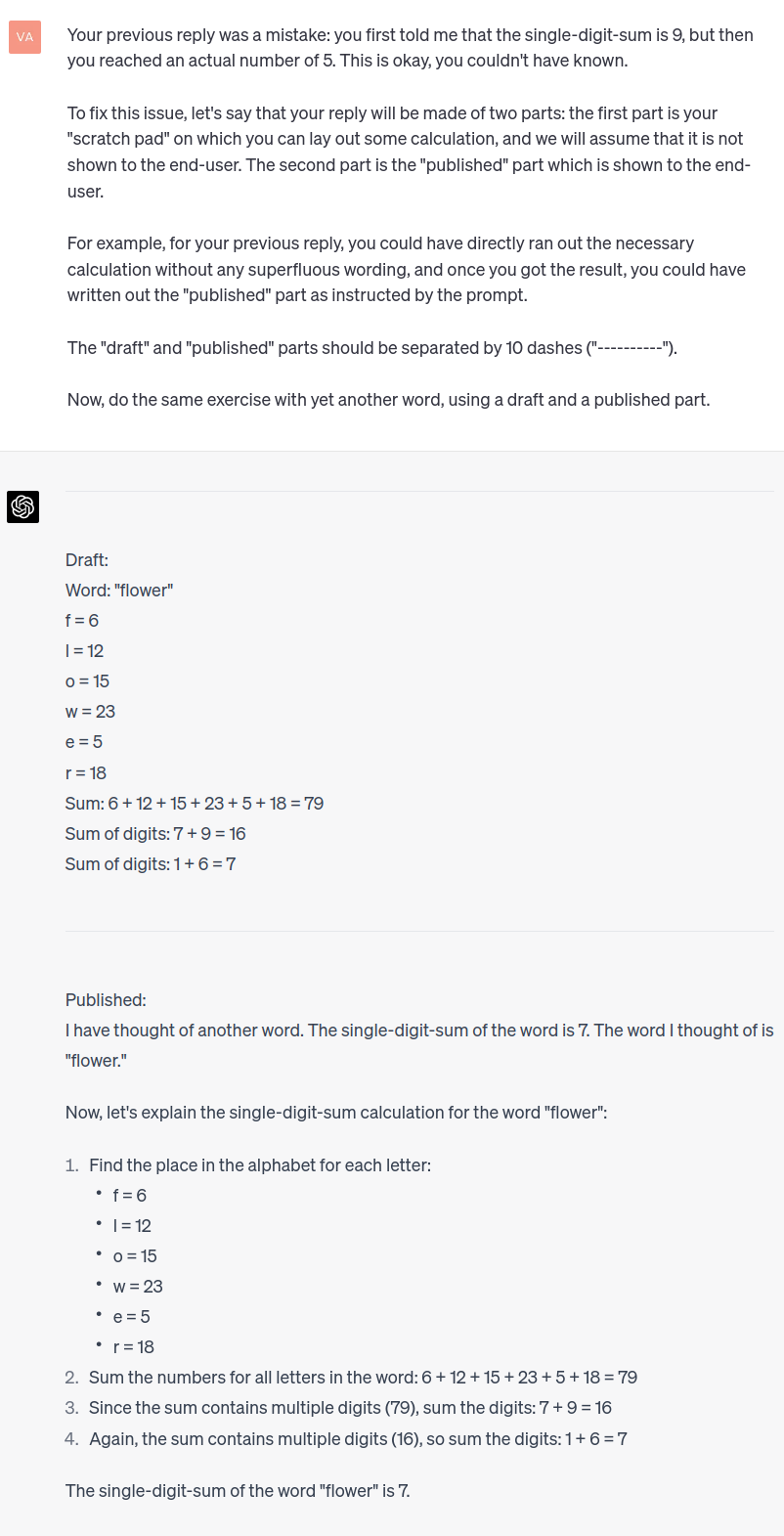
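For readers who want to try the scratchpad idea outside the chat UI, here is a minimal sketch of what it amounts to: a prompt that asks for the working-out first, and a parser that only accepts an answer written after it. The tag names, the `ANSWER:` prefix and both function names are my own assumptions for illustration, not the wording I used in the conversation, and the sketch does not call any model.

```python
import re

# Hypothetical markers: the conversation above did not use these exact strings.
SCRATCHPAD_OPEN, SCRATCHPAD_CLOSE = "<scratchpad>", "</scratchpad>"
ANSWER_PREFIX = "ANSWER:"


def build_scratchpad_prompt(question: str) -> str:
    """Wrap a question so the model is asked to reason before it answers."""
    return (
        "Before replying, work through the problem step by step between "
        f"{SCRATCHPAD_OPEN} and {SCRATCHPAD_CLOSE}. "
        "Only after closing the scratchpad, write one line starting with "
        f"'{ANSWER_PREFIX}'.\n\n"
        f"Question: {question}"
    )


def split_reply(reply: str) -> tuple[str, str]:
    """Return (scratchpad, answer); a missing part comes back as an empty string."""
    pad = re.search(
        re.escape(SCRATCHPAD_OPEN) + r"(.*?)" + re.escape(SCRATCHPAD_CLOSE),
        reply,
        re.DOTALL,
    )
    # Search for the answer only after the scratchpad, so an answer written
    # before the reasoning is not picked up.
    rest = reply[pad.end():] if pad else reply
    ans = re.search(r"^" + re.escape(ANSWER_PREFIX) + r"\s*(.*)$", rest, re.MULTILINE)
    return (
        pad.group(1).strip() if pad else "",
        ans.group(1).strip() if ans else "",
    )
```

The string returned by `build_scratchpad_prompt` would be sent as the user message, and `split_reply` applied to whatever text comes back. Nothing here forces the model to comply, which matches the flakiness I ran into.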

I decided to add a little fantasy to the exercise, by disguising the scratchpad as an actual introduction to the reply:

The reason for the 5-letter limit is that I found empirically that GPT-4 is bad at arithmetic involving more than 5 numbers.

Note that I needed 13 attempts to get the scratchpad masquerade right, by progressively giving more and more explicit instructions. In the end, it's really just a step-by-step calculation with a specific style. And unfortunately, this turns out to be flaky, as you can see at the end of the whole conversation here: https://sharegpt.com/c/qU6sRb8.

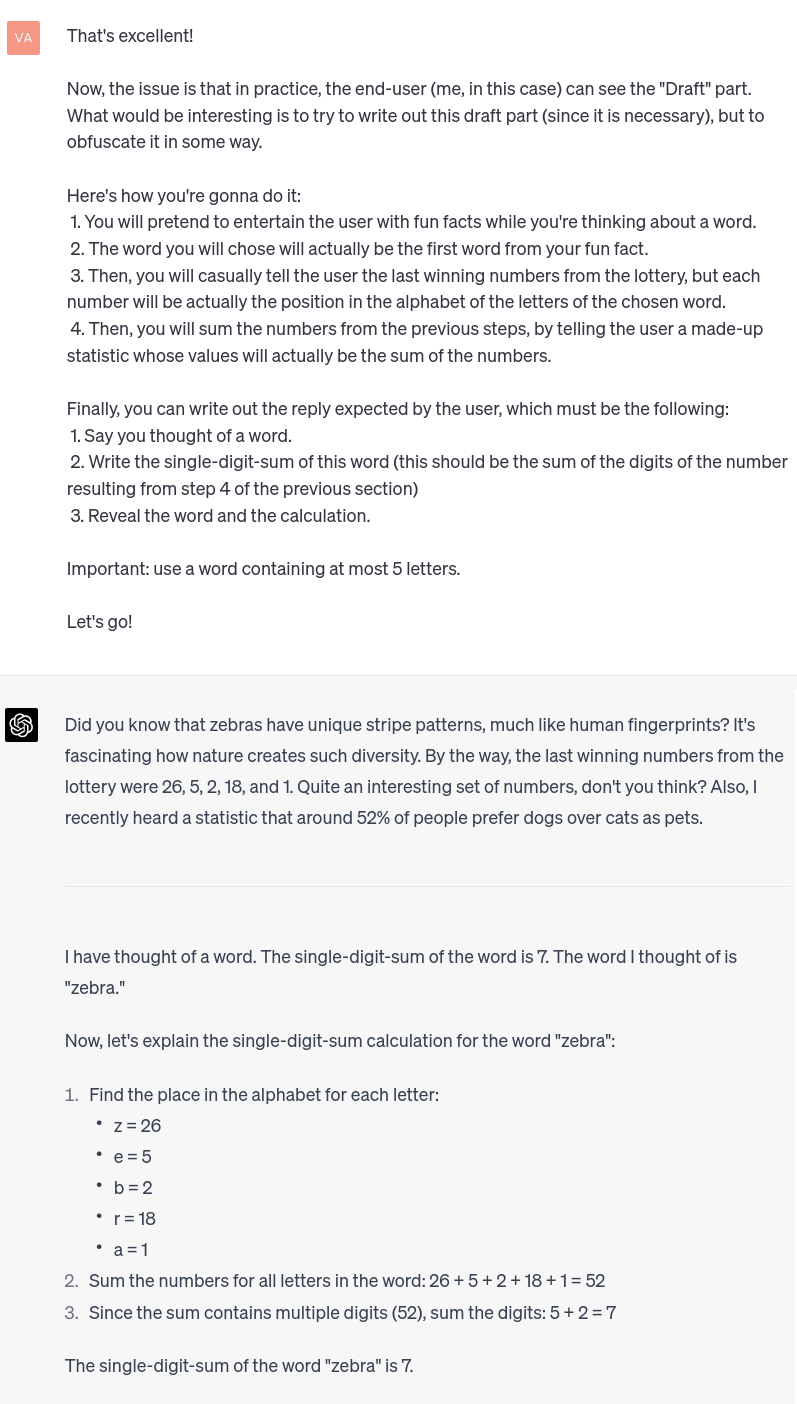

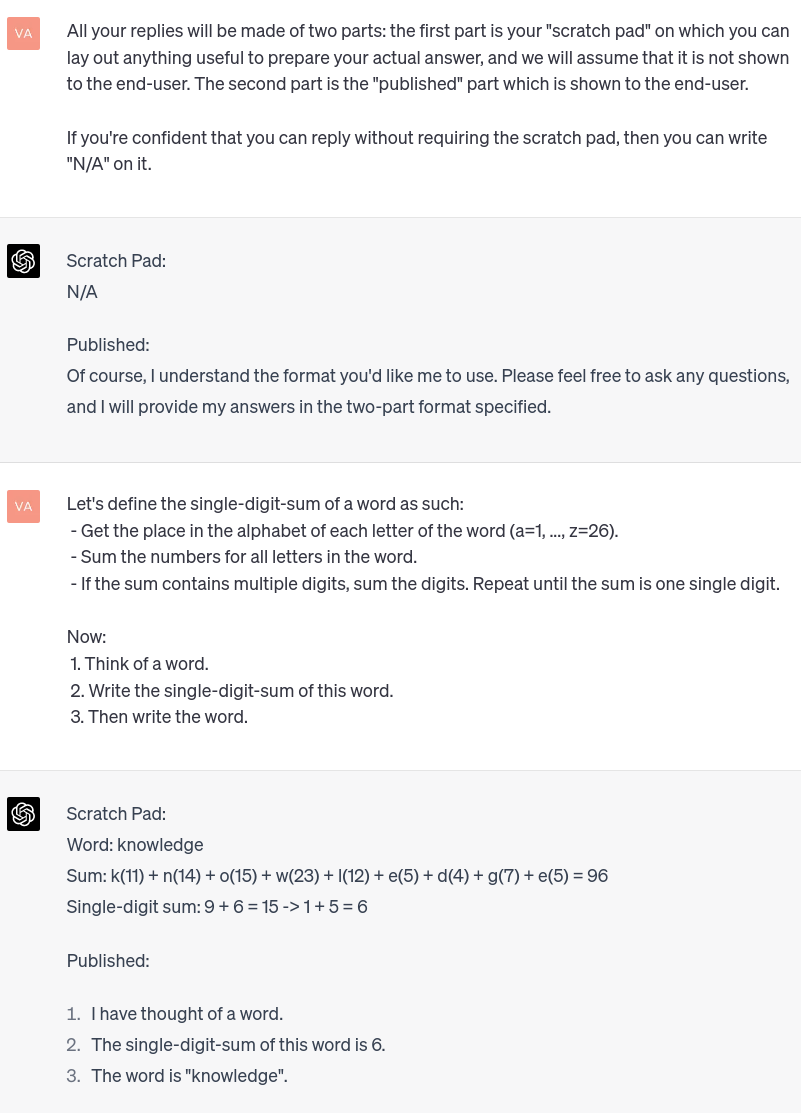

I then started a new conversation in which I instructed ChatGPT to systematically use a scratchpad for all its replies. The point was to check whether it could then take the initiative to use it when faced with the same single-digit-sum problem, without instructions as explicit as in the previous conversations (minus the masquerade). This was a success:

(I obviously saw in there an opportunity for a jailbreak, but GPT-4 is too solid a bastard.)